I'm a fan (and a sponsor) of Dear ImGUI.

I've written a couple of previous articles on it including

this one and

this one

Lately I thought, I wonder what it would be like to try to make an

HTML library that followed a similar style of API.

NOTE: This is not Dear ImGUI running in JavaScript. For that see

this repo. The difference

is most ImGUI libraries render their own text and graphics. More specifically

they generate arrays of vertex positions, texture coordinates, and

vertex colors for the glyphs and other lines and rectangles for your

UI. You draw each array of vertices using whatever method you feel like.

(WebGL, OpenGL, Vulkan, DirectX, Unreal, Unity, etc...)

This experiment is instead actually using HTML elements like <div>

<input type="text">, <input type="range">, <button> etc...

This has pluses and minus.

The minus is it's likely not as fast as Dear ImGUI (or other ImGUI)

libraries, especially if you've got a complex UI that updates at 60fps?

On the other hand it might actually be faster for many use cases. See below

The pluses are

If you don't update anything in the UI it doesn't use any CPU

In Dear ImGUI you must re-render the UI every frame if you

non UI stuff (game/app) changes.

Styling is free (use CSS)

Most ImGUI libraries have very minimal styling features but here

we get all of CSS to style.

Layout is free (word wrap, sizing, grids, spacing

Most layout happen outside the library based on css. In Dear ImGUI

all layout happens every frame by the library. Here, if we want

4 elements side by side we just surround them with flex or grid

and the browser handles layout.

Supports all of Unicode

Most ImGUI libraries only handle a small number of glyphs.

They may or may not handle colored emoji 🍎🍐🍇🐯🐻🦁🦁😉🤣

or Japanese(日本語), Korean(한국어), Chinese(汉语). I don't think

any handle right to left languages like Arabic(عربي).

Supports more fonts

This might not be fair, I'm sure Dear ImGUI can use more than one

font. The thing is it's likely cumbersome, especially multiple

sizes in multiple styles where as

the

browser

excels

at this.

Supports Language Input

Many ImGUI libraries have issues with language input. Because they

are rendering their own text they have 2nd class support for

complex input and input method editors.

Supports Accessability

Most ImGUI libraries are not very accessability friendly. Because

they just ultimately render pixels there is not much for an accessability

feature to look at. With HTML, it's far easier for the browser

and or OS to look into the content of the elements.

Automatic zoom support

The browser zooms. In Dear ImGUI you'd have to manually code zooming support?

Automatic HD-DPI support

The browser will render text and most other widgets taking into

account the user's device's HD-DPI features.

In general, less code than Dear ImGUI should be executing if you are not updating

1000s of values per frame.

Consider, Dear ImGUI is mostly stateless AFAIK. That means things like word

wrapping a string or computing the size of column might need to be done on

every render. In ImHUI's case, that's handled by the browser and if the contents

of an element has not changed much of that is cached.

Thoughts so far

So, what have I noticed so far...

Simpler to get your data in/out

What's nice about the ImGUI style of stateless UI is that you don't have to setup

event handlers nor really marshall data in and out of UI widgets.

Consider standard JavaScript. If you have an <input type="text"> you probably have

code something like this

const elem = document.createElement('input');

elem.type = 'text';

elem.addEventListener('input', (e) => {

someObject.someProperty = elem.value;

});You'd also need someway to update the element if the value changes

// when someObject.someProperty changes

elem.value = someObject.someProperty;

You now need some system for tracking when you update someObject.someProperty.

React makes this sightly easier. It handles the updating. It doesn't handle

the getting.

function MyTextInput() {

return {

<input

value={someObject.someProperty}

onChange={function(e) { someObject.someProperty = this.value; }>

}

}Of course that faux react code above won't work. You need to use state or some other solution

so that react knows to re-render when you change someObject.someProperty.

function MyTextInput() {

const [value, setValue] = useState(someObject.someProperty);

return {

<input

value={value}

onChange={function(e) { setValue(this.value); }>

}

}So now React will, um, react to the state changing but it won't react

to someObject.someProperty changing, like say if you selected a different

object. So you have to add more code. The code above also provides no way

to get the data back into someObject.someProperty so you have to add more

code.

In C++ ImGUI style you'd do one of these

// pass the value in, get the new value out

someObject.someProperty = ImGUI::textInput(someObject.someProperty);

or

// pass by reference. updates automatically

ImGUI::textInput(someObject.someProperty);

JavaScript doesn't support passing by reference so we can't do the 2nd style, OR,

we could pass in some getter/setter pair to let the code change

values.

// pass the value in, get the new value out (still works in JS)

someObject.someProperty = textInput(someObject.someProperty);

// use a getter/setter generator

textInput(gs(someObject, 'someProperty'));

Where gs is defined something like

function gs(obj, propertyName) {

return {

get() { return obj[propertyName]; },

set(v) { obj[propertyName] = v; },

};

}In any case, it's decidedly simpler than either vanilla JS or React. There is no other code to get

the new value from the UI back to your data storage. It just happens.

Less Flexible?

I'm not sure how to describe this. Basically I notice all the HTML/CSS features I'm throwing away

using ImGUI because I know what HTML elements I'm creating underneath.

Consider the text function. It just takes a string and adds a row to the current UI

ImGUI::text('this text appears in a row by itself');There's no way to set a className. There's no way to choose span instead of div or

sub or sup or h1 etc..

Looking through the ImGUI code I see lots of stateful functions to help this along

so (making up an example) one solution is some function which sets which type will be

created

ImGUI::TextElement('div')

ImGUI::text('this text is put in a div');

ImGUI::text('this text is put in a div too');

ImGUI::TextElement('span')

ImGUI::text('this text is put in a span');The same is true for which class name to use or adding on styles etc.

I see those littered throughout the ImGUI examples. As another example

ImGUI::text('this text is on its own line');

ImGUI::text('this text can is not'); ImGui::SameLine();Is that a plus? A Minus? Should I add more optional parameters to functions

ImHUI.text(msg: string, className?: string, type?: string)

or

ImGUI.text(msg: string, attr: Record<string, any>)

where you could do something like

ImGUI.text("hello world", {className: 'glow', style: {color: 'red'}});I'm not yet sure what's the best direction here.

Higher level = Easier to Use

One thing I've noticed is that, at least with Dear ImGUI, more things are decided for you.

Or maybe that's another way of saying Dear ImGUI is a higher level library than React or Vanilla JS/HTML.

As a simple example

ImGUI::sliderFloat("Degrees", myFloatVariable, -360, +360);Effectively represents 4 separate concepts

- A label ("degrees") probably made with a

<div>

- A slider. In HTML made with

<input type="range">

- A number display. In this case probably a separate

<div>

- A container for all 3 of those pieces.

So, is ImGUI actually simpler than HTML or is it just the fact that it has

higher level components?

In other words, to do that with raw HTML requires creating 4 elements,

childing the first 3 into one of them, responding to input events,

updating the number display when an input event arrives. Updating

both the number display and the <input> element's value if the value

changes externally to the UI widgets.

But, if I had existing higher level UI components that already handled is that enough

to make things easier? Meaning how much of Dear ImGUI's ease of use comes

from its paradigm and how much from a large library of higher level widgets?

This is kind of like comparing programming languages. For given language, how much of

the perceived benefit comes from the language itself and how much from the standard

libraries or common environment it runs in.

Notes in implementation

getter setters vs direct assignment

ImGUI uses C++ ability to pass by reference. JavaScript has no ability to pass by reference.

In other words in C++ I can do this

void multBy2(int& v) {

v *= 2;

}

int foo = 123;

multBy2(foo);

cout << foo; // prints 246There is no way to do this in JavaScript.

Following the Dear ImGUI API I first tried to work around this by requiring

you pass in an getter-setter like this

var foo = 123;

var fooGetterSetter = {

get() { return foo; }

set(v) { foo = v; }

};which you could then use like this

// slider that goes from 0 to 200

ImHUI.sliderFloat("Some Value", fooGetterSetter, 0, 200);Of course if the point of using one of these libraries is ease of use then

it sucks to have to make getter-setters.

I thought maybe I could make getter setter generators like the one gs shown above.

It means for the easiest usage you're required to use objects so instead of bare foo

you'd do something like

const data = {

foo: 123,

};

...

// slider that goes from 0 to 200

ImHUI.sliderFloat("Some Value", gs(data, 'foo'), 0, 200);That has 2 problems though. One is that it can't be type checked because you have

to pass in a string to gs(object: Object, propertyName: string).

The other is it's effectively generating a new getter-setter on every

invocation. To put it another way, while the easy to type code looks like the line

just above, the performant code would require creating a getter-setter at init time like

this

const data = {

foo: 123,

};

const fooGetterSetter = gs(data, 'foo');

...

// slider that goes from 0 to 200

ImHUI.sliderFloat("Some Value", fooGetterSetter, 0, 200);I could probably make some function that generates getters/setters for all properties but that

also sounds yuck as it removes you from your data.

const data = {

foo: 123,

};

const dataGetterSetters = generateGetterSetters(data)

...

// slider that goes from 0 to 200

ImHUI.sliderFloat("Some Value", dataGetterSetter.foo, 0, 200);Another solution would be to require using an object and then make all the ImHUI functions

take an object and a property name as in

// slider that goes from 0 to 200

ImHUI.sliderFloat("Some Value", data, 'foo', 0, 200);That has the same issue though that because you're passing in a property name by

string it's error prone and types can't be checked.

So, at least at the moment, I've ended up changing it so you pass in the value

and it passes back a new one

// slider that goes from 0 to 200

foo = ImHUI.sliderFloat("Some Value", foo, 0, 200);

// or

// slider that goes from 0 to 200

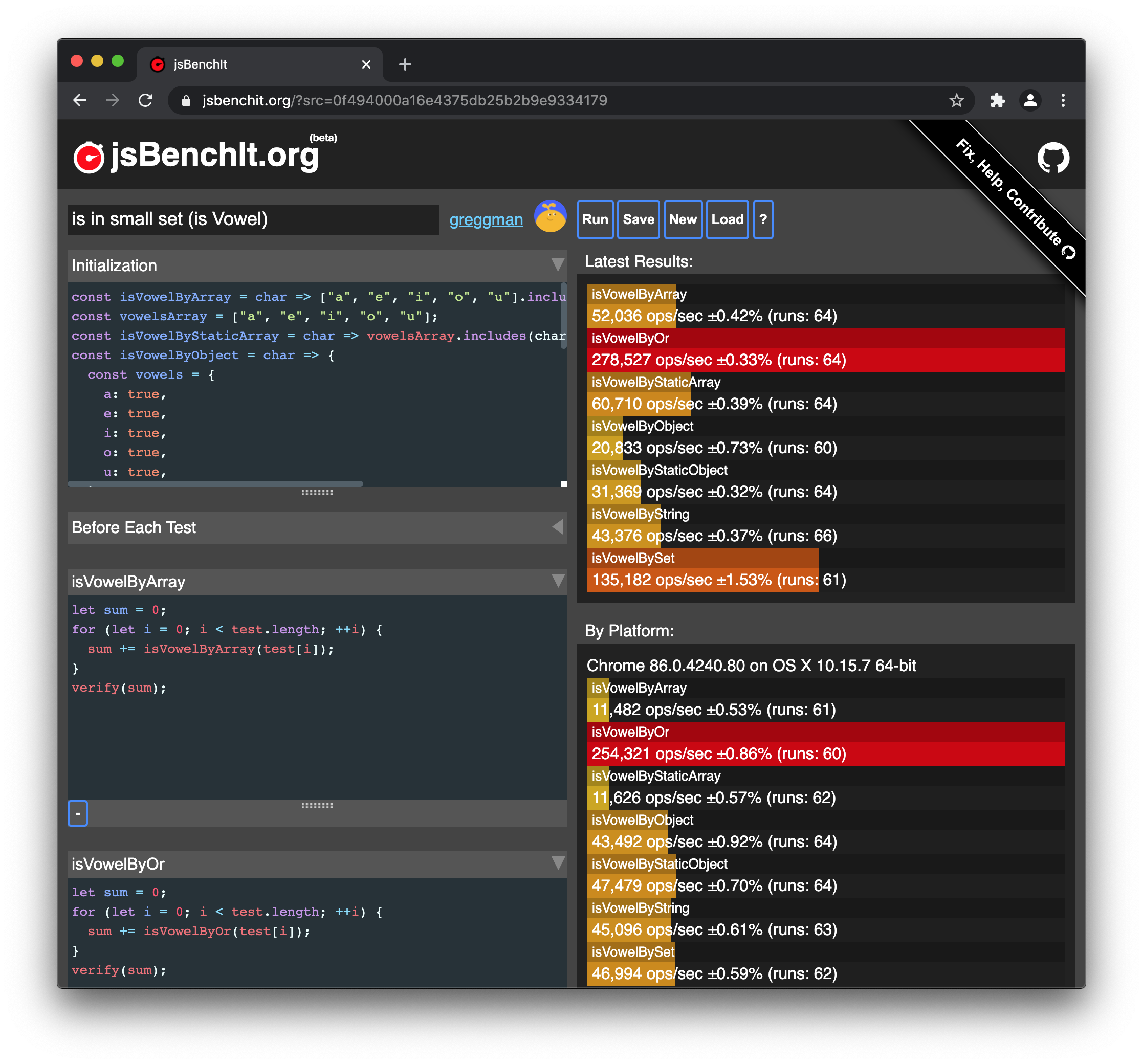

data.foo = ImHUI.sliderFloat("Some Value", data.foo, 0, 200);It's far more performant than using getter-setters, on top of being more performant

than generating getter-setters. Further it's type safe. Eslint or TypeScript can both

warn you about non-existing properties and possibly type mis-matches.

Figuring out the smallest building blocks

The 3rd widget I created was the sliderFloat which as I pointed out above

consists of 4 elements, a div for the label, a div for the displayed value,

an input[type=range] for the slider, and a container to arrange them.

When I first implemented it I made a class that manages all 4 elements.

But later I realized each of those 4 elements is useful on its own so the

current implementation is just nested ImHUI calls. A sliderFloat is

function slideFloat(label: string, value: number, min: number = 0, max: number = 1) {

beginWrapper('slider-float');

value = sliderFloatNode(value, min, max);

text(value.toFixed(2));

text(prompt);

endWrapper();

return value;

}The question for me is, what are the smallest building blocks?

For example a draggable window is currently hand coded as a combination of parts.

There's the outer div, it's scalable. There's the title bar for the window, it

has the text for the title and it's draggable to move the window around.

Can I separate those so a window is built from these lower-level parts?

That's something to explore.

Diagrams, Images, Graphs

You can see in the current live example I put in a version of ImGUI::plotLines

which takes a list of values and plots them as a 2D line. The current implementation

creates a 2D canvas using a canvasNode which returns a Canvas2DRenderingContext.

In other words, if you want to draw something live you can build your own widget

like this

function circleGraph(zeroToOne: number) {

const ctx = canvasNode();

const {width, height} = ctx.canvas;

const radius = Math.min(width, height);

ctx.beginPath();

ctx.arc(width /2, height / 2, radius, 0, Math.PI * 2 * zeroToOne);

ctx.fill();

}The canvas will be auto-sized to fit its container so you just draw stuff on it.

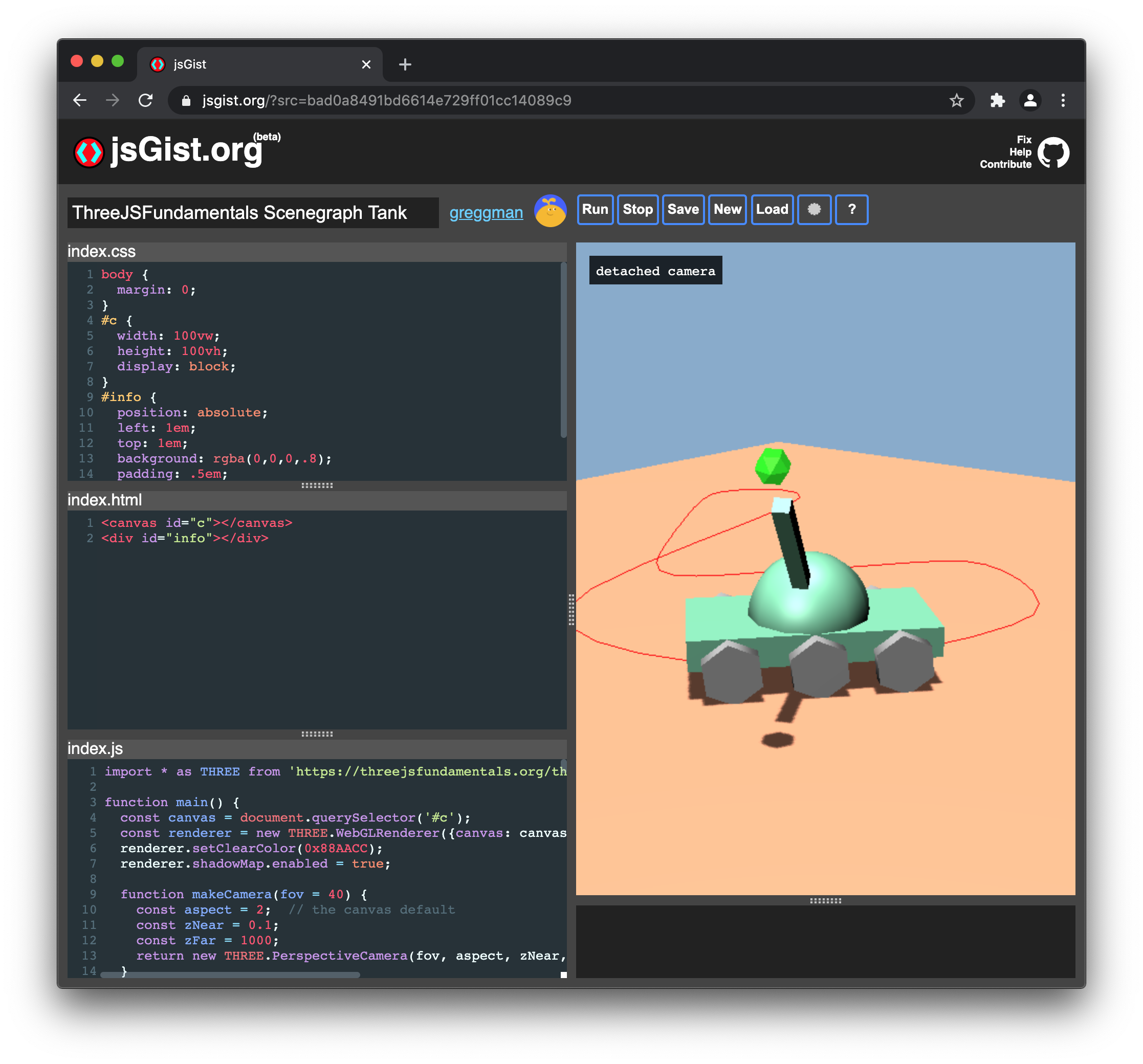

The thing is, the canvas 2D api is not that fast. At what point should I try to use WebGL

or let you use WebGL. If I use WebGL there's the context limit issues. Just something

to think about. Given the way ImGUIs work if you have 1000 lines to draw then every time

the UI updates you have to draw all 1000 lines. In C++ ImGUI that's just inserting

some data into the vertex buffers being generated, but in JavaScript, with Canvas 2D,

it's doing a lot more work to call into the Canvas2D API.

It's something to explore.

So far it's just an Experiment

I have no idea where this is going. I don't have any projects that need a GUI like this

at the moment but maybe if I can get it into something I think is kind of stable I'd

consider using it over something like dat.gui which

is probably far and way the most common UI library for WebGL visualizations.